Index

1. Scenario Map of the System

2. Verification and Scoring for the Platform

3. User Interface Mock-up

4. Visualization Models

4.a. Google Street Map Visualization Model

4.b. Eye-level Visualization Model (4 parts)

5. Iterations Suggested during the Semester's Critiques

6. Iteration Execution

6.a. Chronology Display

6.b. Automated Stitching of two Reference Maps

6.c Time Stamp

6.d Satellite Map

1. Scenario Map

This is a layout of my scenario map.

––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––

2. Verification and Scores

––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––

3. User Interface Mock-up

I started modeling this mock-up of a UI for the Eyewitness platform when I first met with David Ascher. Since then I have been adding and removing elements. It comprises the essential components of the platform.

––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––

4. Visualization Models

4.a Google Street View

4.b Eye-level Map

––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––

4.a Google Street View Visualization Model

Feedback from two reviewers (C. Heathrington and J. Cooperstock) suggested that I consider Google Street View as my Master Reference Map, to which all other reference maps would be attached. So I carried out an experiment to test that idea.

Notes:

One or multiple perspectives on Occupy Vancouver against a static background of Google Street View

* If the videos are shot from different perspectives (camera angles), Google Street View can't currently accommodate them, since it doesn't allow vertical movement to look at streets from an aerial view or a worm's-eye view. It can only accommodate an eye-level view.

* I'm currently looking at ways to use a Google Street View reference map as the reference for the video reference map.

Results:

1- Adjusted to the HMV sign (right)

2- Adjusted to the white street line

3- Adjusted to be parallel to the left white line

The video preview shows the difficulty of geo-registering the target video against a Google Street View image. In other words, the street view can't act as the reference map. The video contains three attempts: in the first I tried to register the video against the left section of the Google Street View image, in the second against the middle section, and in the third against the white line. At the end of the video there is a final attempt where I rotate both the reference map and the target video, trying to adjust them relative to each other so that they show a tighter relation between the target video and the reference. That didn't work either.
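The registration above was judged by eye. One way to put a number on how badly a target frame fits a reference is to pick a few corresponding points by hand in both images (for example, corners of the HMV sign or points along the white line), fit a transform to them, and measure the leftover error. This is a minimal sketch under stated assumptions, not the method used in the experiment: it assumes a 2D affine model (a simplification, since a perspective homography would be the more faithful model for eye-level views), and the helpers `solve3`, `fit_affine`, and `rms_residual` are hypothetical.

```python
"""Least-squares 2D affine fit between hand-picked point pairs, plus RMS residual.

Sketch only: the affine model and any sample coordinates are assumptions.
"""


def solve3(a, b):
    """Solve the 3x3 system a·x = b by Gaussian elimination with partial pivoting."""
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate point set (e.g. all points collinear)")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, 3):
            factor = m[r][col] / m[col][col]
            for c in range(col, 4):
                m[r][c] -= factor * m[col][c]
    x = [0.0, 0.0, 0.0]
    for r in (2, 1, 0):  # back substitution on the upper-triangular system
        x[r] = (m[r][3] - sum(m[r][c] * x[c] for c in range(r + 1, 3))) / m[r][r]
    return x


def fit_affine(src, dst):
    """Fit u = a*x + b*y + c and v = d*x + e*y + f to point pairs src[i] -> dst[i]."""
    if len(src) != len(dst) or len(src) < 3:
        raise ValueError("need at least 3 matching point pairs")
    ata = [[0.0] * 3 for _ in range(3)]  # normal matrix A^T A
    atu = [0.0] * 3                      # A^T u
    atv = [0.0] * 3                      # A^T v
    for (x, y), (u, v) in zip(src, dst):
        row = (x, y, 1.0)
        for i in range(3):
            for j in range(3):
                ata[i][j] += row[i] * row[j]
            atu[i] += row[i] * u
            atv[i] += row[i] * v
    return solve3(ata, atu), solve3(ata, atv)


def rms_residual(src, dst, coeffs):
    """Root-mean-square distance (in pixels) between mapped src points and dst points."""
    (a, b, c), (d, e, f) = coeffs
    total = 0.0
    for (x, y), (u, v) in zip(src, dst):
        total += (a * x + b * y + c - u) ** 2 + (d * x + e * y + f - v) ** 2
    return (total / len(src)) ** 0.5
```

A residual near zero means the chosen points agree on one consistent mapping; a large residual, as one would expect from the eye-level Street View attempts, suggests the reference can't register the frame under this model.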

Conclusion:

1. A target video and Google Street View, both seen from an eye-level perspective, don't work well together at all.

2. The best option is for the master reference to be a 3D reconstruction, as suggested by the UCLA team and by Prof. Zakhor (possibly from the same university).

––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––

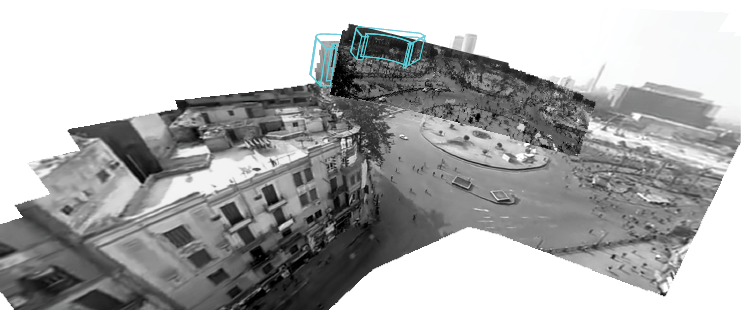

4.b Eye-level Map Visualization Model

I received many comments suggesting that I model the Eyewitness visualization from an eye-level view rather than a top-down view. It is a relevant point, since many citizen media videos are shot from a normal perspective. I then decided to model an Eyewitness reference map and video from this perspective. The following are parts of my process toward that visualization model.

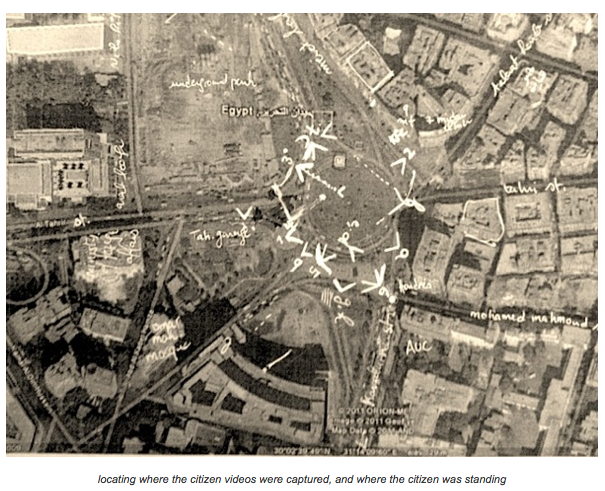

Wikimapia of the Tahrir Square area

Part 2

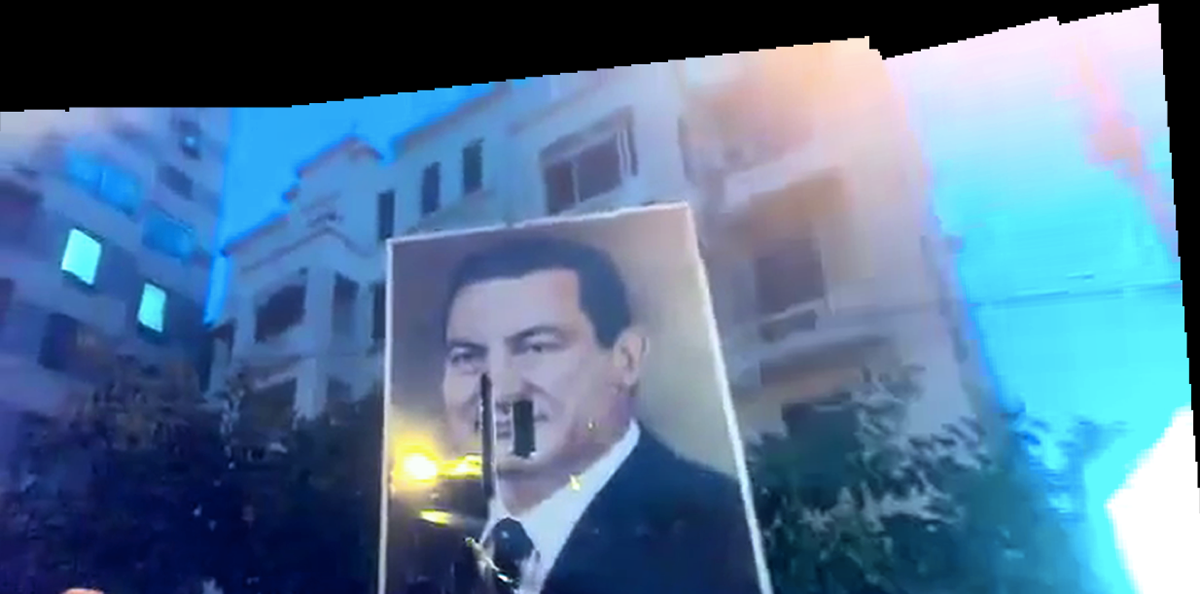

I'll aggregate citizen media videos captured in Cairo during politically charged events, transform them into stills, and then start synthesizing them. The result will reveal the spaces and scopes that tell us what happened and where events unfolded, based on the citizens' own record, that is, citizen media.
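Transforming a video into stills can be as simple as sampling one frame at a fixed interval. A minimal sketch, assuming the frame rate and duration are known and that one still every few seconds is enough; `still_frame_indices` and its parameters are hypothetical, and the actual frame export (done with whatever video tool is at hand) is left out.

```python
def still_frame_indices(duration_s, fps, every_s):
    """Frame numbers to export as stills: one frame every `every_s` seconds."""
    if fps <= 0 or every_s <= 0:
        raise ValueError("fps and every_s must be positive")
    total_frames = int(duration_s * fps)
    step = every_s * fps  # frames between consecutive stills (may be fractional)
    indices = []
    k = 0
    while round(k * step) < total_frames:
        indices.append(round(k * step))
        k += 1
    return indices
```

Rounding each multiple of the step, rather than accumulating a rounded step, keeps non-integer frame rates from drifting over a long clip.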

The map might reveal one or more of the following: where the witnesses were standing, the diversity of views capturing an event, the place of the event, and how it changed over the course of the day (layering the changes in the space in relation to time). Certain relations between the visualization of the space, the plotting, and the event will be revealed, but it is not yet clear what these relations are.

A- The day was chosen based on how easily the event date and the video posting date could be verified. Hence, Jan 25 was chosen.

B- Aggregating videos from different locations on the same day would probably make it harder to construct a map from them. So I'm thinking of aggregating from specific locations (Egypt > Cairo >> Tahrir).

Not knowing where a video was captured is an issue: it stands in the way of experimenting with the synthesis of reference maps.

At the start of the experimentation process, I tried to relate and detect where the different reference maps are located. Some locations are still unknown.

Part 3

I started aggregating the videos. I went through the first 10 pages of a Google Videos search, using a specific formula to look up videos uploaded on January 25. I chose videos captured by citizens rather than by news corporations, so all news reports were excluded from my selection. Some were captured in the daytime and others at night; at this stage I aggregated both.

Surprisingly, there weren't many: about 8 videos. That might be because I excluded videos that may have been captured on January 25 but were uploaded a day later.
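The selection rule above (citizen footage only, uploaded on the event day) can be written as a filter with an optional grace period that would also admit next-day uploads. A minimal sketch: the `Candidate` record, its `is_news` flag, and the `grace_days` parameter are hypothetical, and the search formula itself is not reproduced here.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Candidate:
    title: str
    uploaded: date   # upload date shown on the video page
    is_news: bool    # hypothetical flag: True for reports by news organizations


def select_citizen_videos(candidates, event_day, grace_days=0):
    """Keep citizen (non-news) videos uploaded on event_day or up to grace_days after it."""
    last_day = event_day + timedelta(days=grace_days)
    return [
        c for c in candidates
        if not c.is_news and event_day <= c.uploaded <= last_day
    ]
```

With `grace_days=0` this matches the strict rule used here; raising it to 1 would recover next-day uploads, at the cost of admitting videos whose capture date is less certain.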

Some geographical maps were used in the process to help locate where the videos were shot and where the person filming was standing.

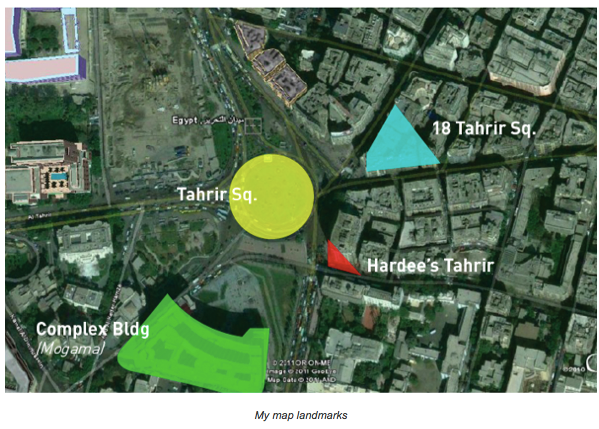

I started looking at the reference map and defining common landmarks. These were: Tahrir Sq., the complex building (Mogama'), 18 Tahrir Sq., and Hardee's Tahrir.

Part 4

Concluding Notes

Criteria Defined by the Synthetic Map Model:

- The blueprint needs to contain landmarks and buildings:

for each main location (e.g. Tahrir Sq., Mostafa Mahmud, Mohamed Mahmud)

define landmarks (e.g. Hardee's, the 3 common buildings, Mogama', metro sign)

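Once each reference map is tagged with the landmarks it shows, the platform could propose which maps to stitch by looking for shared landmarks. A minimal sketch, assuming landmark tags are plain names like those listed above; the map IDs and `suggest_pairs` are hypothetical.

```python
from itertools import combinations


def suggest_pairs(refmaps):
    """Propose reference-map pairs to stitch.

    refmaps maps a reference-map ID to the set of landmark names tagged in it.
    Returns (map_a, map_b, shared_landmarks) for every pair sharing at least one
    landmark, with pairs that share more landmarks listed first.
    """
    suggestions = []
    for (id_a, marks_a), (id_b, marks_b) in combinations(sorted(refmaps.items()), 2):
        shared = marks_a & marks_b
        if shared:
            suggestions.append((id_a, id_b, sorted(shared)))
    # Python's sort is stable (also with reverse=True), so ties keep ID order.
    suggestions.sort(key=lambda s: len(s[2]), reverse=True)
    return suggestions
```

Ranking by the number of shared landmarks is a simple heuristic: more shared anchors usually give the stitching step more points to align against.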

Matters concluded:

- Night views with fire in them are hard to construct a reference map from.

That would mean that platform participants stitch and arrange such views by hand, with more room for interaction when a night view is chosen.

- Picking Jan 25 is good for modeling and figuring out a map,

but a model for another, less certain day may be executed at some point, to recognize the problems associated with being unsure about the date, or to find ways to eliminate wrong portions or detect wrong options.

(If I were to nail it down to a concrete format for this period, the end of the 3rd semester, I'd go on with the Jan 25 model and suppose that the crowd will be able to verify the date, or that the original uploader of the content will be guided on how to find the original date of the video on his computer.)

Pushing the Night View further:

I tried to pick images that obviously complement one another to create a landscape, yet the program still can't detect the match.

–––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––

5. Iteration

Video preview scale

Use it and allow it for a different, everyday scenario (e.g. take participants on a tour, in groups or separately, let each capture a place or event, then put the captures together so they can see the effect of the platform in constructing a collective view of the place)

Platform suggests stitching refmaps based on the defined landmarks :: puzzles, computing, internet

Everyone has a different role, and each role has different requirements :: on the home page, ask participants what they are good at (good at it all; good at detecting important evidence and videos; good at puzzling, videos, aligning)

Time chronology at the bottom

Map from the top, too, to inform the user about the overall location of the events;

have an icon moving around the map that informs the user about the space.

Signal how many have verified the video

Replace the binocular icon

The user can create an event (the "create an event" user scenario)

Let the visual area dominate by giving less importance to the icons

Replace the vertical line by an empty space

Make the bottom menu smaller; tuck it away when the video is playing

Protect the identity of the witness and the witnessed (ObscuraCam)

Engaging the first person

Twitter feeds

Replace "synthesis" with "alignment" or "placement"

Lead users to existing gaps (e.g. Wikipedia's "this article needs more ..." notices)

Redefine personas and limit them to a maximum of 5

–––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––––

6. Iteration Execution

6.a. Chronology Display

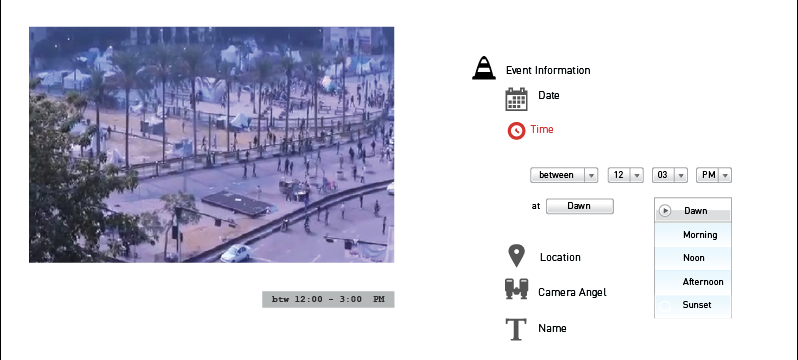

Incorporating the cultural aspect of prayers to help identify a video's time.

Other factors that can help identify the time (based on my observations) are:

- Density

- Video Lighting (exposure)

- Chants

- Protest Banners
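The prayer cue can be combined with other cues by intersecting the time windows each one allows. A minimal sketch, assuming the prayer times for the day and city are supplied as input (none are given here), that an audible call to prayer places the video within a fixed slack of that prayer, and that lighting only separates day from night, crudely approximated as fajr to maghrib. `estimate_windows` and its parameters are hypothetical; density, chants, and banners are not modeled.

```python
from datetime import time

DAY_END = 24 * 60  # minutes in a day


def minutes(t):
    """Convert a datetime.time to minutes since midnight."""
    return t.hour * 60 + t.minute


def intersect(a, b):
    """Intersect two lists of (start, end) minute intervals."""
    out = []
    for lo1, hi1 in a:
        for lo2, hi2 in b:
            lo, hi = max(lo1, lo2), min(hi1, hi2)
            if lo <= hi:
                out.append((lo, hi))
    return out


def estimate_windows(prayers, heard_call=None, daylight=None, slack_min=15):
    """Narrow a video's time of day from observed cues.

    prayers: dict of prayer name -> datetime.time for the day and city in question.
    heard_call: name of a prayer whose call is audible in the video, if any.
    daylight: True/False if lighting clearly shows day or night, None if unclear.
    """
    windows = [(0, DAY_END - 1)]
    if heard_call is not None:
        t = minutes(prayers[heard_call])
        windows = intersect(windows, [(t - slack_min, t + slack_min)])
    if daylight is not None:
        fajr, maghrib = minutes(prayers["fajr"]), minutes(prayers["maghrib"])
        span = [(fajr, maghrib)] if daylight else [(0, fajr), (maghrib, DAY_END - 1)]
        windows = intersect(windows, span)
    return windows
```

Each cue only ever shrinks the candidate set, so adding a new cue later (for example, crowd density) means adding one more interval list to intersect.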

In the UI, picking the time of the event will be displayed on 4 pages:

the event info,

stitching reference maps,

stitching videos, and

previewing events.

6.b. Automated Stitching of two Reference Maps

If landmarks are registered at an earlier stage, this information can be used to help the participant stitch two reference maps, as in this example, where the Mogama' building is used as a reference landmark.

It's possible that the platform won't be able to adjust it accurately; the participant is then asked to approve or disapprove the automated stitching.
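When the same landmark points (for example, corners of the Mogama' building) are marked in both reference maps, the platform could estimate the rotation, uniform scale, and offset between the maps, then show the result for the participant to approve or reject. A minimal sketch, assuming at least two matched points and a flat 2D similarity model; `propose_stitch`, its result fields, and the 5-pixel threshold are hypothetical. The fit is the closed-form least-squares solution with points treated as complex numbers.

```python
import cmath
import math


def fit_similarity(src, dst):
    """Least-squares 2D similarity transform w ≈ a*z + b (points as complex numbers).

    |a| is the scale, arg(a) the rotation, and b the translation.
    """
    if len(src) != len(dst) or len(src) < 2:
        raise ValueError("need at least two matched landmark points")
    zs = [complex(x, y) for x, y in src]
    ws = [complex(x, y) for x, y in dst]
    z_mean, w_mean = sum(zs) / len(zs), sum(ws) / len(ws)
    den = sum(abs(z - z_mean) ** 2 for z in zs)
    if den == 0:
        raise ValueError("landmark points in the first map must not all coincide")
    num = sum((w - w_mean) * (z - z_mean).conjugate() for z, w in zip(zs, ws))
    a = num / den
    return a, w_mean - a * z_mean


def propose_stitch(src, dst, max_rms_px=5.0):
    """Estimate the transform taking map A points (src) onto map B points (dst).

    The participant still approves or rejects; low_confidence only tells the UI
    to warn that the automated alignment may be off.
    """
    a, b = fit_similarity(src, dst)
    errors = [abs(a * complex(*p) + b - complex(*q)) for p, q in zip(src, dst)]
    rms = math.sqrt(sum(e * e for e in errors) / len(errors))
    return {
        "scale": abs(a),
        "rotation_deg": math.degrees(cmath.phase(a)),
        "offset": (b.real, b.imag),
        "rms_px": rms,
        "low_confidence": rms > max_rms_px,
    }
```

A similarity model keeps the maps undistorted, which suits flat reference maps; eye-level video frames would need a richer model, as the Street View experiment suggested.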

6.c Time Stamp

Many of the reviewers on Nov 15 suggested including a time stamp on the Eyewitness videos. Here are my thoughts so far.

The indicated time is approximate. I'm thinking of Courier to give it an official feel.

Place it superimposed on the video or on its edge.

Display options in the case of two videos or reference maps:

Displayed in a box or...

Distributed on a time line

Suggestion to include a map indicating where the videos were captured
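Because the indicated time is approximate, the label could show a center time with an explicit margin, and the same estimate could place the video on a timeline. A minimal sketch, assuming the estimated window is given in minutes since midnight; `approx_label`, `timeline_x`, and the pixel width are hypothetical.

```python
def fmt_hhmm(minute):
    """Format minutes since midnight as HH:MM."""
    return f"{minute // 60:02d}:{minute % 60:02d}"


def approx_label(lo_min, hi_min):
    """Label an estimated window as center time ± half-width, e.g. '~15:00 (±15 min)'."""
    center = (lo_min + hi_min) // 2
    half = (hi_min - lo_min + 1) // 2  # rounded up so the margin never understates
    return f"~{fmt_hhmm(center)} (±{half} min)"


def timeline_x(minute, day_start, day_end, width_px):
    """Horizontal pixel position of a time on a timeline spanning [day_start, day_end]."""
    if day_end <= day_start:
        raise ValueError("day_end must be after day_start")
    frac = (minute - day_start) / (day_end - day_start)
    return round(min(max(frac, 0.0), 1.0) * width_px)  # clamp to the visible range
```

Showing the margin next to the time makes the approximation visible to viewers instead of implying a precise capture time.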

6.d Satellite Map

This is still a challenge: as the reference maps are downscaled to fit the desktop screen, the satellite map occupies a large portion of the screen. Downsizing the satellite map is not a solution, because that would affect the readability of the location.

Synchronizing the preview of the videos with the timeline and the Google satellite map
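Synchronizing the videos with the timeline and the satellite map can rest on one shared clock: given the playhead position, each clip's estimated start time tells which videos are playing, where to seek in each, and which map markers to highlight. A minimal sketch, assuming each clip already has an estimated start time and a capture location; the `Clip` record and `sync_at` are hypothetical, and drawing the satellite map is left out.

```python
from dataclasses import dataclass


@dataclass
class Clip:
    clip_id: str
    start_s: float     # estimated start on the shared event timeline, in seconds
    duration_s: float
    lat: float         # capture location, used to place the marker on the map
    lon: float


def sync_at(clips, playhead_s):
    """For a playhead position on the shared timeline, list the clips playing then.

    Each entry is (clip_id, offset within that clip in seconds, (lat, lon)),
    which a UI could use to seek each video and highlight its marker on the map.
    """
    active = []
    for clip in clips:
        offset = playhead_s - clip.start_s
        if 0 <= offset < clip.duration_s:
            active.append((clip.clip_id, offset, (clip.lat, clip.lon)))
    return active
```

Driving the videos, the timeline, and the map from the same playhead value keeps the three views consistent without each one tracking time on its own.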

Week's conclusion:

Any visual added to an Eyewitness video competes with it. A solution might be tucking the elements away while a video is previewing. Whenever the viewer hovers over an Eyewitness video, information such as the timestamp and verification numbers will pop up.